Efficient Dataset Storage: Beyond CSVs


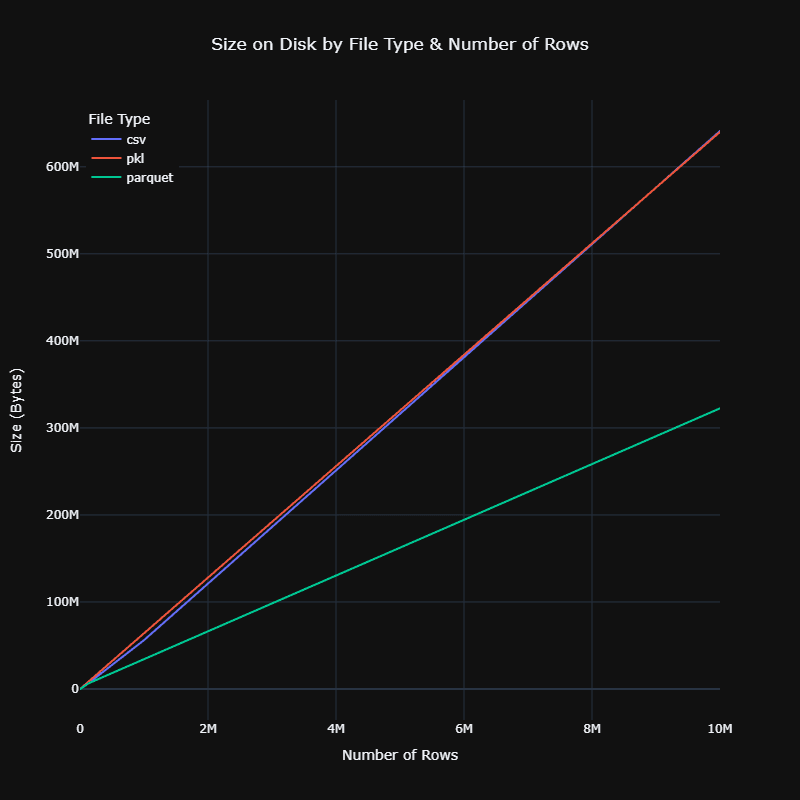

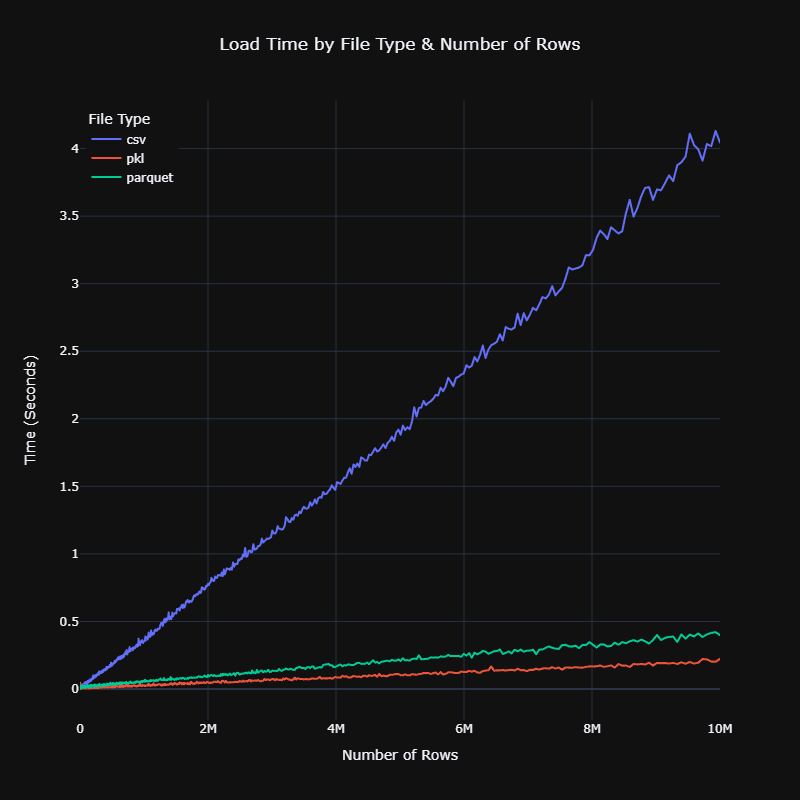

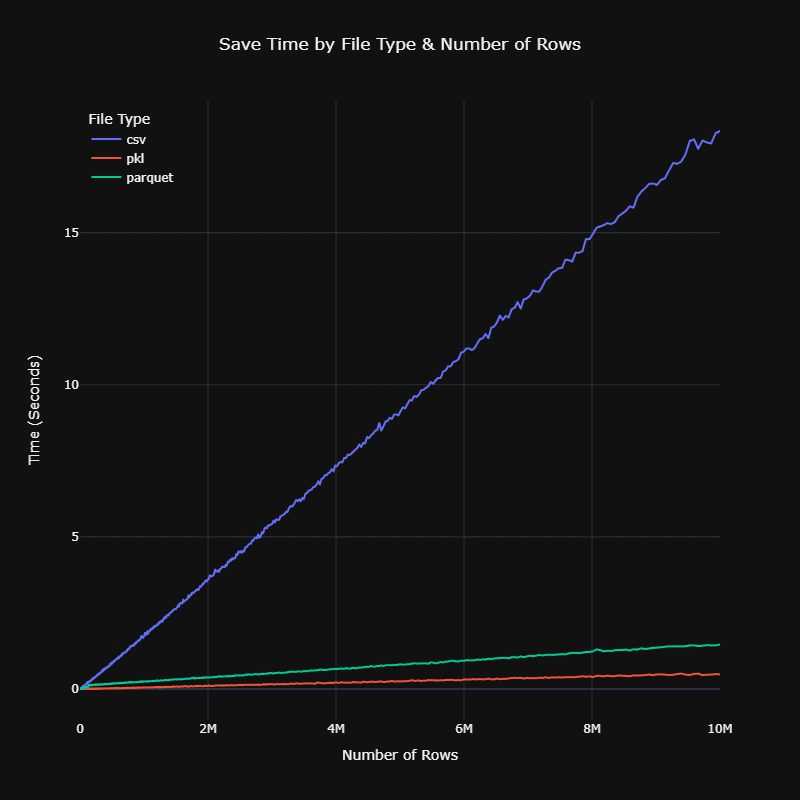

As a data scientist, one of the crucial aspects of your work is managing and storing datasets efficiently. CSVs may be common and handy when you want to share and read your data, but there are other file formats that are significantly more efficient in terms of speed and disk space.

In this article, I'll explore alternative file formats that can outperform CSVs, especially when dealing with larger datasets.

Performance Comparison

The data speaks for itself when comparing different storage formats across various dataset sizes.
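A comparison like this can be reproduced on your own machine. The sketch below is not the benchmark behind this article's numbers. It is a minimal illustration that assumes a small synthetic frame, a single timed write and read per format, and hypothetical helper names (`make_frame`, `benchmark_formats`). Parquet and Feather are only included when the optional `pyarrow` package is installed.

```python
import importlib.util
import os
import tempfile
import time

import numpy as np
import pandas as pd


def make_frame(n_rows: int, seed: int = 0) -> pd.DataFrame:
    """Build a synthetic frame with mixed column types (illustrative only)."""
    rng = np.random.default_rng(seed)
    return pd.DataFrame({
        "id": np.arange(n_rows, dtype="int64"),
        "value": rng.normal(size=n_rows),
        "label": rng.choice(["a", "b", "c"], size=n_rows),
    })


def benchmark_formats(df: pd.DataFrame) -> pd.DataFrame:
    """Time one write and one read per format and record the file size."""
    formats = {
        "csv": (lambda p: df.to_csv(p, index=False), pd.read_csv),
        "pickle": (df.to_pickle, pd.read_pickle),
    }
    # Parquet and Feather need the optional pyarrow dependency.
    if importlib.util.find_spec("pyarrow") is not None:
        formats["parquet"] = (df.to_parquet, pd.read_parquet)
        formats["feather"] = (df.to_feather, pd.read_feather)

    rows = []
    with tempfile.TemporaryDirectory() as tmp:
        for name, (write, read) in formats.items():
            path = os.path.join(tmp, f"data.{name}")
            start = time.perf_counter()
            write(path)
            write_s = time.perf_counter() - start
            start = time.perf_counter()
            read(path)
            read_s = time.perf_counter() - start
            rows.append({
                "format": name,
                "write_s": write_s,
                "read_s": read_s,
                "size_mb": os.path.getsize(path) / 1e6,
            })
    return pd.DataFrame(rows).set_index("format")


if __name__ == "__main__":
    for n_rows in (10_000, 100_000):
        print(f"{n_rows} rows")
        print(benchmark_formats(make_frame(n_rows)))
```

A single timed run is noisy, so for numbers you intend to rely on, repeat each measurement several times and use your real data, since compression ratios depend heavily on the column contents.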

Binary Format Advantages

Binary formats like Pickle and Parquet offer enhanced performance for both reading and compression. The key differences are:

- Pickle: Significantly faster to read/write than CSVs, though file sizes remain similar

- Parquet: Excels in reducing disk space usage with superior compression

- Feather: Another alternative offering better compression than CSV

These binary formats preserve your data types automatically, so you don't need to specify column types when loading. This is a significant advantage over CSVs, which store every value as text and leave the types to be inferred or declared again on load.
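To make the point about data types concrete, here is a small sketch that round-trips the same frame through CSV and through Pickle using in-memory buffers. The column names and values are made up for illustration. Parquet and Feather are left out only so the example runs without `pyarrow`.

```python
import io

import pandas as pd

# Illustrative frame: a datetime column, a categorical column,
# and string codes with leading zeros.
df = pd.DataFrame({
    "when": pd.to_datetime(["2023-01-01", "2023-01-02"]),
    "grade": pd.Categorical(["low", "high"]),
    "code": ["007", "042"],
})

# CSV round trip: values are written as text and re-inferred on load.
csv_buffer = io.StringIO()
df.to_csv(csv_buffer, index=False)
csv_buffer.seek(0)
from_csv = pd.read_csv(csv_buffer)

# Pickle round trip: the frame is serialized with its dtypes intact.
pkl_buffer = io.BytesIO()
df.to_pickle(pkl_buffer)
pkl_buffer.seek(0)
from_pickle = pd.read_pickle(pkl_buffer)

print("original:\n", df.dtypes, sep="")
print("after CSV:\n", from_csv.dtypes, sep="")
print("after Pickle:\n", from_pickle.dtypes, sep="")
```

After the CSV round trip the datetime and categorical columns are no longer datetime or categorical, and the `code` column is parsed as integers, so the leading zeros are lost. Recovering the original types from a CSV means passing options such as `dtype` and `parse_dates` to `pd.read_csv` yourself.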

Parquet's Additional Benefits

For a little extra load time, Parquet saves substantial space on disk. This becomes increasingly important when working with larger datasets.

Parquet also supports column-wise reading, making it more efficient for large datasets when you only need specific columns:

```python
import pandas as pd

# Load only selected columns of a Parquet file
master_ref = pd.read_parquet('./datasets/master_ref.parquet',
                             columns=['short_1', 'short_2'])
```

This selective loading capability can dramatically reduce memory usage and load times for wide datasets.
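The memory effect of selective loading is easy to check. The sketch below assumes a synthetic wide frame of random floats and hypothetical helper names (`loaded_bytes`, `compare_column_subset`). It writes the frame to a temporary Parquet file, then compares the in-memory size of loading every column against loading two. It needs a Parquet engine such as `pyarrow`, and skips the demonstration if that package is missing.

```python
import importlib.util
import os
import tempfile

import numpy as np
import pandas as pd


def loaded_bytes(path, columns=None):
    """Return the in-memory size of the frame read from a Parquet file."""
    frame = pd.read_parquet(path, columns=columns)
    return int(frame.memory_usage(deep=True).sum())


def compare_column_subset(n_rows=10_000, n_cols=50):
    """Write a wide synthetic frame, then load all columns versus two."""
    rng = np.random.default_rng(0)
    wide = pd.DataFrame(
        rng.normal(size=(n_rows, n_cols)),
        columns=[f"col_{i}" for i in range(n_cols)],
    )
    with tempfile.TemporaryDirectory() as tmp:
        path = os.path.join(tmp, "wide.parquet")
        wide.to_parquet(path)
        return loaded_bytes(path), loaded_bytes(path, ["col_0", "col_1"])


# Parquet support needs an engine such as pyarrow; skip if it is missing.
if importlib.util.find_spec("pyarrow") is not None:
    full, subset = compare_column_subset()
    print(f"all columns: {full} bytes, two columns: {subset} bytes")
```

The saving scales with how many columns you leave out, so the benefit is largest on wide tables where an analysis touches only a handful of fields.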

Conclusion

CSVs may not always be the best option, especially for large datasets. Consider binary formats for better speed and compression - your future self will thank you when working with production-scale data.

The choice between formats depends on your specific needs: use Pickle for maximum speed, Parquet for optimal storage efficiency, and CSVs only when human readability or cross-platform compatibility is essential.
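One way to keep the format decision flexible in a project is to route all reads and writes through a pair of small helpers that pick the pandas function from the file suffix. This is only a sketch of that idea: `save_dataset`, `load_dataset`, and the suffix tables are hypothetical names, and the `.parquet` entries assume a Parquet engine such as `pyarrow` is installed.

```python
from pathlib import Path

import pandas as pd

# Hypothetical suffix-to-method tables; extend them as needed.
WRITERS = {".csv": "to_csv", ".pkl": "to_pickle", ".parquet": "to_parquet"}
READERS = {".csv": "read_csv", ".pkl": "read_pickle", ".parquet": "read_parquet"}


def save_dataset(df: pd.DataFrame, path: str) -> None:
    """Write df with the pandas writer that matches the file suffix."""
    suffix = Path(path).suffix.lower()
    if suffix not in WRITERS:
        raise ValueError(f"unsupported format: {suffix!r}")
    # Only the CSV writer gets index=False, to avoid a spurious index column.
    kwargs = {"index": False} if suffix == ".csv" else {}
    getattr(df, WRITERS[suffix])(path, **kwargs)


def load_dataset(path: str) -> pd.DataFrame:
    """Read a dataset with the pandas reader that matches the file suffix."""
    suffix = Path(path).suffix.lower()
    if suffix not in READERS:
        raise ValueError(f"unsupported format: {suffix!r}")
    return getattr(pd, READERS[suffix])(path)
```

With helpers like these, switching a pipeline from CSV to Parquet is a matter of changing a file name, for example `save_dataset(df, "master_ref.parquet")` instead of `save_dataset(df, "master_ref.csv")`.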